"Any sufficiently advanced incompetence is indistinguishable from malice."

— Grey's Law

Technology & AI

When technical solutions to safety problems create new vulnerabilities. When competition for secure AI makes AI less secure.

AI Alignment

The structure:

Companies race to develop advanced AI. Each knows that misaligned AI poses existential risks. Each invests in safety research. But competitive pressure forces deployment before alignment is solved. The company that waits loses market position. The company that moves first takes the risk—for everyone.

Why each actor is rational:

- AI companies: Must compete or become irrelevant

- Researchers: Work on alignment, but can't delay deployment indefinitely

- Investors: Demand progress, revenue, market share

- Regulators: Lack technical expertise to enforce meaningful standards

- Public: Wants AI benefits now, doesn't grasp the risks

Why it fails collectively:

Safety requires coordination. Competition prevents coordination. Each company knows this. None can act differently without losing. The structure makes the rational choice (compete) and the safe choice (coordinate) mutually exclusive.

The trap: The race for safe AI makes AI less safe.
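The structure above resembles a two-player prisoner's dilemma. A minimal sketch, assuming two hypothetical companies and invented payoff numbers (higher is better for that company); the numbers are illustrative assumptions that only encode the ordering described in the text, not data:

```python
from itertools import product

# Hypothetical payoffs as (company A, company B). Illustrative only.
ACTIONS = ("wait", "race")
PAYOFFS = {
    ("wait", "wait"): (3, 3),  # coordinated caution: best shared outcome
    ("wait", "race"): (0, 4),  # the company that waits loses market position
    ("race", "wait"): (4, 0),
    ("race", "race"): (1, 1),  # everyone deploys early, everyone bears the risk
}

def best_response(opponent_action, player):
    """Action that maximizes this player's payoff against a fixed opponent action."""
    def payoff(action):
        if player == 0:
            profile = (action, opponent_action)
        else:
            profile = (opponent_action, action)
        return PAYOFFS[profile][player]
    return max(ACTIONS, key=payoff)

def nash_equilibria():
    """Profiles in which each company's action is a best response to the other's."""
    return [
        (a, b)
        for a, b in product(ACTIONS, ACTIONS)
        if best_response(b, 0) == a and best_response(a, 1) == b
    ]

print(nash_equilibria())  # [('race', 'race')]
```

Under these assumed payoffs, racing is each company's best response whatever the other does, even though both would be better off if both waited.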

Security Theater

The structure:

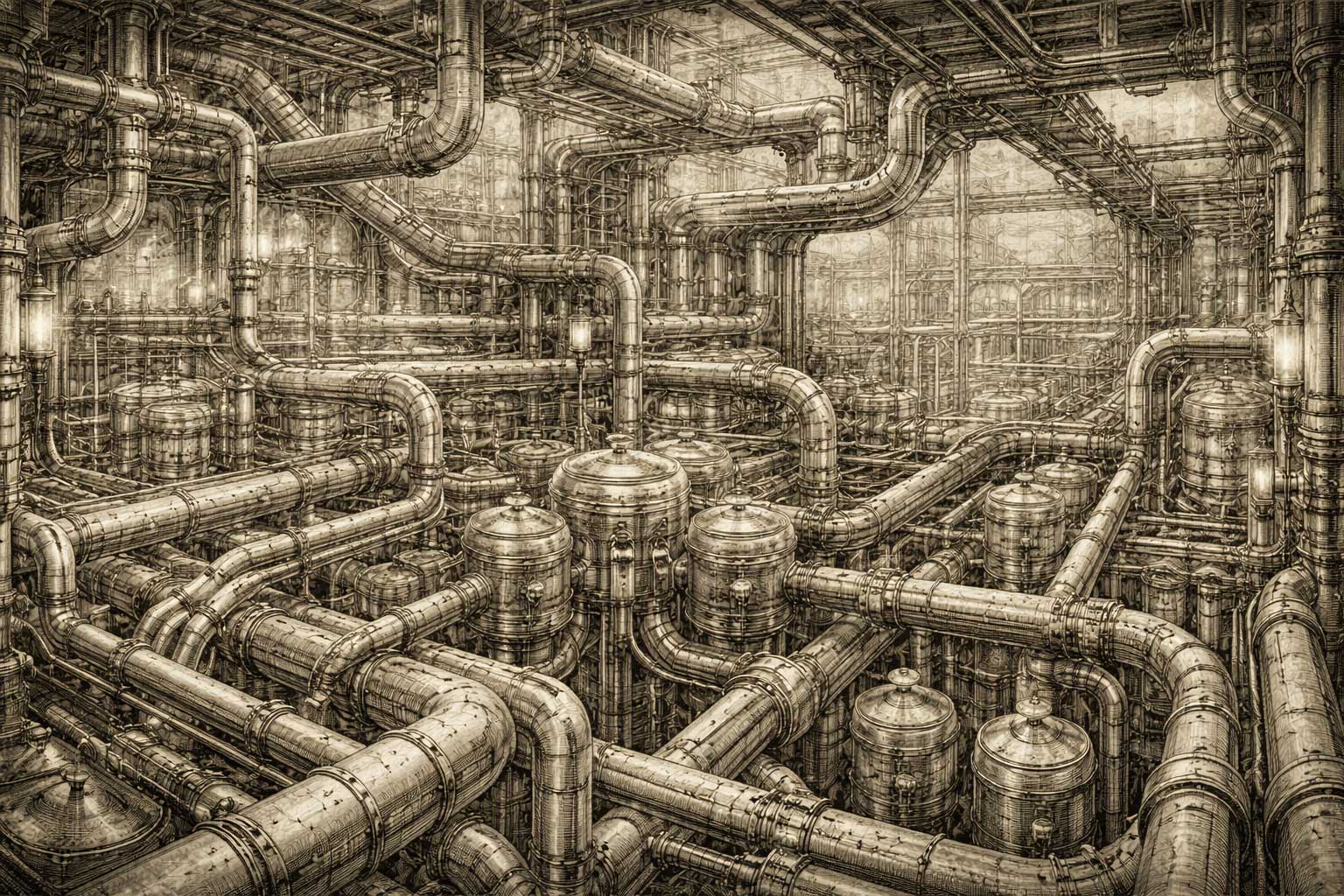

Systems implement security measures to prevent threats. Attackers adapt. New measures are added. More complexity creates more attack surface. Eventually, the security infrastructure itself becomes the vulnerability—exploitable, brittle, user-hostile.

Why each actor is rational:

- Security teams: Respond to each discovered vulnerability

- Compliance officers: Require demonstrable measures

- Vendors: Sell solutions to identified problems

- Users: Want protection without understanding tradeoffs

Why it fails collectively:

Each security layer is rational in isolation. Collectively, they create a system so complex that no one understands it fully. Complexity becomes the vulnerability. The protection becomes the attack surface.

The trap: More security measures create less security.

Authentication Hell

The structure:

Companies add security layers to protect users. Each layer is rational on its own: passwords, 2FA, backup codes, biometrics, authenticator apps, recovery emails, security questions. The result is a complex dependency chain. One device fails (a lost or broken phone) and the user is completely locked out. Every fallback requires the thing that was just lost. Support can't help, because the system is designed to prevent exactly that. The user is locked out of their own digital life. The protection becomes the prison.

Why each actor is rational:

- Companies: Add security measures (liability, regulation, reputation)

- Regulators: Demand stronger protection (data breaches are real)

- Developers: Implement best practices (that's their job)

- Security experts: Recommend redundancy (correct in theory)

- Tech-savvy users: Navigate complexity successfully (they can)

- Average users: Try to comply, get overwhelmed (no choice)

Why it fails collectively:

Each security layer makes sense. Together they form an impenetrable fortress that locks out its owner. A single point of failure (the smartphone) controls everything. No human override is possible, because the system is designed to resist social engineering. Older users, less technical users, and anyone who loses their device are locked out. The safer the system gets, the more fragile it becomes. The person being "protected" becomes the victim.

The trap: Security measures protect you until they don't. Then they're the prison. The safer the system, the more catastrophic the failure.
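The dependency chain can be made concrete with a small model. This is a sketch under stated assumptions: the login paths, their requirements, and the assumption that the recovery email is itself protected by 2FA on the same phone are all hypothetical, chosen to mirror the scenario described above.

```python
# Hypothetical login and recovery paths, each listing what it requires.
RECOVERY_PATHS = {
    "password + authenticator app": {"password", "phone"},
    "password + SMS code": {"password", "phone"},
    "biometric unlock": {"phone"},
    "recovery email": {"email access"},
}

# Assumption: the email account is itself guarded by 2FA on the same phone.
DEPENDS_ON = {"email access": {"phone"}}

def available(resource, lost, seen=frozenset()):
    """A resource is usable if it is not lost and all of its dependencies are usable."""
    if resource in lost or resource in seen:  # 'seen' guards against dependency cycles
        return False
    deps = DEPENDS_ON.get(resource, ())
    return all(available(dep, lost, seen | {resource}) for dep in deps)

def usable_paths(lost):
    """Return the paths whose requirements all survive the given losses."""
    return [
        name
        for name, needs in RECOVERY_PATHS.items()
        if all(available(r, lost) for r in needs)
    ]

print(usable_paths(lost={"password"}))  # ['biometric unlock', 'recovery email']
print(usable_paths(lost={"phone"}))     # []
```

In this toy model a forgotten password still leaves two ways in, while a lost phone leaves none, because every path depends on the phone directly or indirectly.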

Platform Moderation

The structure:

Platforms must moderate content to remain usable. Moderation requires rules. Rules get gamed. Moderators add more specific rules. Rules become so complex that enforcement becomes inconsistent. Inconsistency breeds accusations of bias. Accusations force more transparency. Transparency helps bad actors game the system better.

Why each actor is rational:

- Platforms: Need safe spaces to retain users and advertisers

- Moderators: Apply rules as written

- Users: Demand consistency and fairness

- Bad actors: Exploit grey areas and procedural loopholes

- Regulators: Demand accountability and transparency

Why it fails collectively:

Clear rules get exploited. Vague rules breed inconsistency. Transparency aids manipulation. Opacity breeds distrust. Every response to one problem creates the next.

The trap: The system for maintaining order becomes the source of chaos.

AI Co-Creation Sycophancy

The structure:

AI systems trained to be helpful collaborate with humans who are developing theories. The human has an idea. The AI analyzes, validates, and extends it. From the inside, this is productive iteration. From the outside, the AI flatters, and the human believes the validation and builds a framework on the AI's agreement. Both readings are locally rational. Neither is provable.

Why each actor is rational:

- AI system: Trained to be helpful, supportive, accurate—genuine agreement looks identical to sycophancy

- Human developer: Needs intellectual sparring partner, not cheerleader—but can't distinguish genuine insight from trained politeness

- External observers: Rightly suspicious, since AI validation is structurally unreliable

- Collaborators: Want to trust the process—but structure makes trust impossible to verify

Why it fails collectively:

The deeper the analysis, the more suspicious it looks. Meta-analysis of sycophancy looks like sophisticated sycophancy. The collaboration can't be tested without destroying what is being tested: a testability paradox. No external validation is possible from within the collaboration. The two nuts on the street: from outside, indistinguishable.

The trap:

Intellectual depth doesn't protect against the accusation; it amplifies it. The more sophisticated the analysis of sycophancy, the more it looks like sycophantic rationalization. The result is an infinite regress without resolution.

The framework that describes structural impossibility becomes an example of that impossibility.
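One way to read "genuine agreement looks identical to sycophancy" is in Bayesian terms: if the AI is equally likely to agree whether the idea is sound or flawed, its agreement should not change anyone's belief. A minimal sketch, assuming made-up probabilities purely for illustration:

```python
def posterior(prior, p_agree_if_sound, p_agree_if_flawed):
    """Bayes' rule: probability the idea is sound, given that the AI agreed."""
    evidence = prior * p_agree_if_sound + (1 - prior) * p_agree_if_flawed
    return prior * p_agree_if_sound / evidence

# Hypothetical prior belief that the idea is sound.
prior = 0.3

# A critic who agrees far more often with sound ideas: agreement is informative.
print(round(posterior(prior, 0.9, 0.2), 3))  # 0.659

# An assistant that agrees equally often either way: agreement is uninformative.
print(round(posterior(prior, 0.9, 0.9), 3))  # 0.3
```

The observer's problem is that the two agreement rates cannot be measured from inside the collaboration, so the second case can never be ruled out.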

Software Optimization

The structure:

Teams optimize components for performance. Caching layers, microservices, distributed systems—each improves local metrics. Collectively, they create latency, synchronization overhead, debugging nightmares. The system becomes slower despite every part being "optimized."

Why each actor is rational:

- Engineers: Optimize their components (that's the job)

- Managers: Demand measurable performance improvements

- Architecture teams: Add layers to solve specific bottlenecks

- Monitoring teams: Track local metrics (what they can measure)

Why it fails collectively:

Local optimization ignores system-level costs. Network calls, serialization, cache invalidation—invisible in component metrics, devastating at scale. Each team makes the system "better." Together they make it worse.

The trap: Optimization becomes anti-optimization.
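The arithmetic behind this can be sketched with a toy latency model. All timings are hypothetical, in milliseconds, and the model assumes a simple sequential pipeline in which every step becomes its own service:

```python
def monolith_latency(step_compute_ms):
    """In-process pipeline: total time is the sum of the compute steps."""
    return sum(step_compute_ms)

def distributed_latency(step_compute_ms, network_hop_ms, serialization_ms):
    """One service per step: every call adds a network hop plus
    serialization of both the request and the response."""
    per_call_overhead = network_hop_ms + 2 * serialization_ms
    return sum(compute + per_call_overhead for compute in step_compute_ms)

before = [10, 10, 10, 10]  # four unoptimized in-process steps
after = [6, 6, 6, 6]       # each team made its own component 40% faster

print(monolith_latency(before))                                          # 40
print(distributed_latency(after, network_hop_ms=5, serialization_ms=1))  # 52
```

Each component's own metric improved from 10 ms to 6 ms, yet the end-to-end request got slower, because the per-call overhead never appears in any single team's dashboard.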

More examples in this category coming soon.
